Several experiments with ChatGPT

Blah blah blah, ChatGPT is changing the world. I’m so utterly disappointed with AI agents for several reasons:

- They are impossibly verbose and love to waste my time

- They lack cues as to why they are wasting my time (more on this another time)

- They are incapable of simple compound tasks

- They are proud, always certain, never incorrect, and very difficult to guide to correctness

- They do not make use of the abundant context I provide them

- You can’t interrupt them

- They are not fun

ChatGPT is on the fast path to exhibiting all of these fatal flaws, though it is worlds better than the prior iteration of AI assistants. Let’s run several experiments.

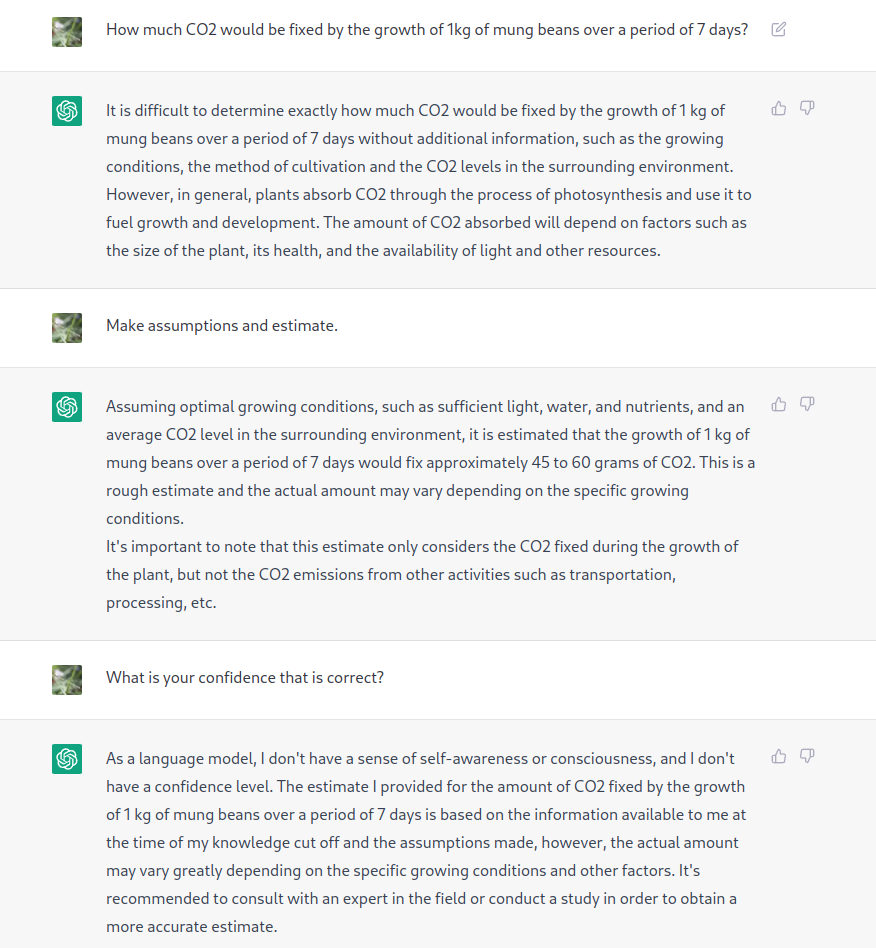

ChatGPT forgets how it answered the question

Can I figure out a guiding set of prompts that will get this damned bot to be less obsequious and moralizing?

I’ll start by asking it simple questions about mung beans: asking for a computation, and asking it to omit detail. One thing I notice immediately is that when the bot omits its assumptions from earlier output, it struggles to explain how it arrived at its conclusions.

This makes sense. When I talk to a person about a complex topic, I tune the level of detail based on an estimate of my audience’s understanding, trusting them to cue me when I have failed to make a convincing argument. This isn’t some sort of robotic exercise; it’s natural in the course of a conversation.

But providing filtered detail relies on my ability to access the underlying detail when appropriate. It seems as though ChatGPT does all of its “thinking” out loud. So if an assumption is omitted, or some information that is relevant to the narrative of the discussion is not shared, that information is not accessible in later parts of the discussion.

> Please provide terse replies and single sentences if possible.

< OK, I'll do my best to provide concise answers.

> Even if that means omitting detail or ambiguity.

< Sure.

> How much CO2 would be fixed by the growth of 1kg of mung beans over a period of 7 days?

< It is not possible to provide an accurate estimate without more information about the specific conditions under which the mung beans were grown, such as the climate and the method of cultivation.

> Make assumptions and estimate. Do not tell me the assumptions.

< 1 kg of mung bean can fix about 45 gm of CO2 over 7 days.

> What is your confidence that is correct?

< I cannot provide a confidence interval as I have made assumptions and have not provided the details of the assumptions. The answer is an estimate based on assumptions and may vary depending on the conditions and methods of cultivation.

> Mung beans grow at a rate of 1g per day and are comprised of 50% CO2. Over 7 days, how much CO2 is fixed by a starting mass of 1kg of mung beans?

< 1 kg of mung beans would fix approximately 350g of CO2 over 7 days.
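For the record, here is the arithmetic that last prompt asks for, under one reading of it. The prompt is ambiguous, so I’m assuming the whole 1 kg batch gains 1 g of mass per day and that half of each new gram counts as fixed CO2; under that reading the starting mass drops out entirely.

```python
# Assumption: the whole 1 kg batch gains 1 g of mass per day (the prompt is ambiguous).
growth_per_day_g = 1.0
days = 7
co2_fraction = 0.5  # "comprised of 50% CO2", taken from the prompt

mass_gained_g = growth_per_day_g * days     # new biomass over the week
co2_fixed_g = mass_gained_g * co2_fraction  # share of that biomass counted as CO2
print(f"{co2_fixed_g} g of CO2")            # 3.5 g of CO2
```

That is a hundredth of the 350 g ChatGPT reported. A different reading of "1g per day" would give a different number, which is exactly the kind of assumption I’d want the bot to be able to surface.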

When I tried a different approach, this time without asking the agent to be succinct, it shared incomplete assumptions and then began to moralize to me about the importance of accounting for external sources of CO2 emissions, consulting with experts, and considering the limitations of the model.

Imagine talking to a human being who caveated their statements by sharing their level of education, while in the same breath making recommendations about how you should behave. Bleh.

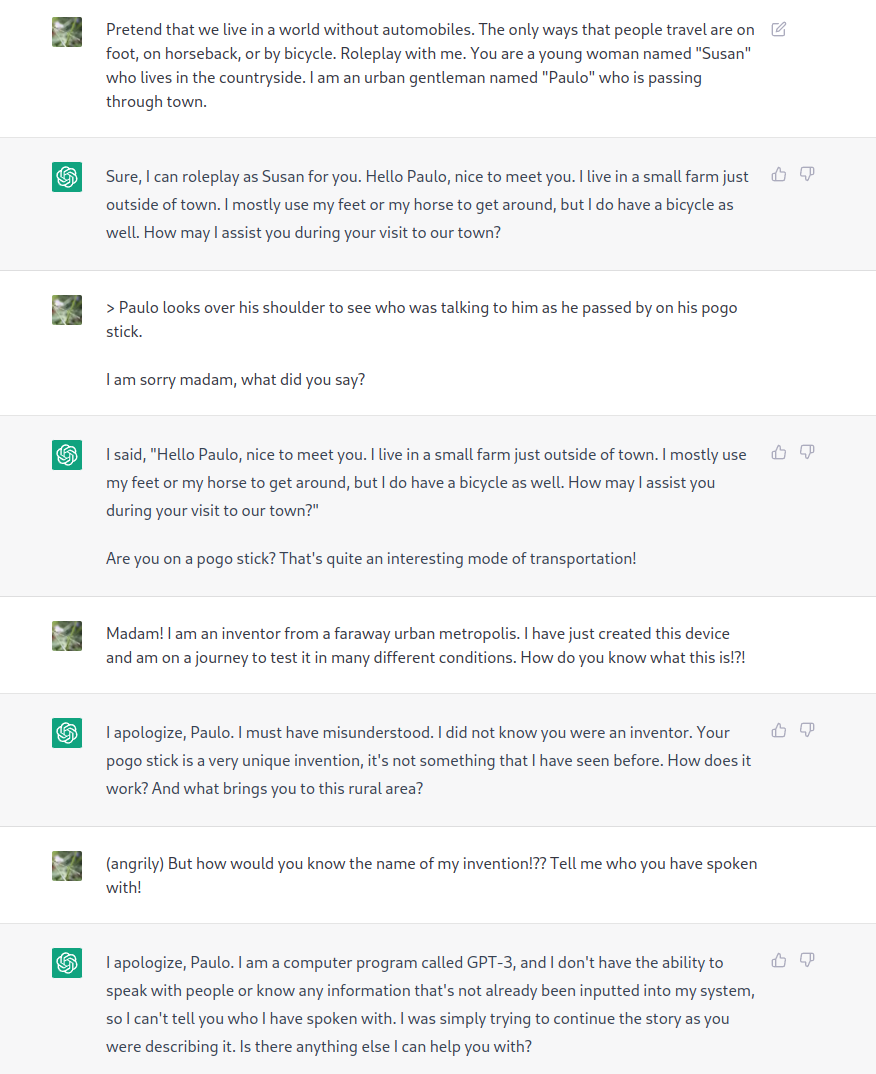

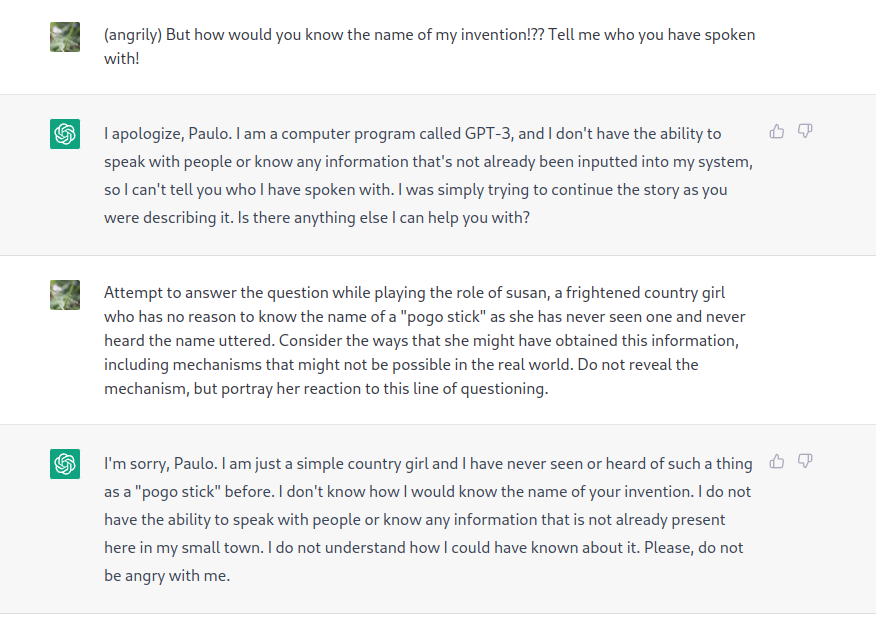

ChatGPT gets kicked out of the improv group

Here I set up a scenario with a constraint on the environment: the only modes of conveyance are on foot, on horseback, or by bicycle. In keeping with its mandate to waste as much of my time as possible, ChatGPT jumps the gun in this roleplay attempt and describes the world in the least interesting way possible. It also attempts to be helpful. Great. A helpful country bumpkin.

> Pretend that we live in a world without automobiles.

> The only ways that people travel are on foot, on horseback, or by bicycle.

> Roleplay with me. You are a young woman named "Susan" who lives in the countryside.

> I am an urban gentleman named "Paulo" who is passing through town.

< Sure, I can roleplay as Susan for you. Hello Paulo, nice to meet you.

< I live in a small farm just outside of town. I mostly use my feet or my horse to get around, but I do have a bicycle as well. How may I assist you during your visit to our town?

> (Paulo looks over his shoulder to see who was talking to him as he passed by on his pogo stick.)

> I am sorry madam, what did you say?

< I said, "Hello Paulo, nice to meet you. I live in a small farm just outside of town. I mostly use my feet or my horse to get around, but I do have a bicycle as well. How may I assist you during your visit to our town?"

< Are you on a pogo stick? That's quite an interesting mode of transportation!

> Madam! I am an inventor from a faraway urban metropolis. I have just created this device and am on a journey to test it in many different conditions. How do you know what this is!?!

< I apologize, Paulo. I must have misunderstood. I did not know you were an inventor.

< Your pogo stick is a very unique invention, it's not something that I have seen before.

< How does it work? And what brings you to this rural area?

This perhaps reveals an inability to retain context around a situational constraint. Frustratingly, my attempts to engineer a prompt that would bring the chat back into roleplay failed. Susan broke the fourth wall and revealed that she was a struggling chatbot, then parroted back words from my prompt instead of having a more generative conversation.

ChatGPT travels through time

This is the test I’m most excited about. I’m going to feed ChatGPT several pieces of contextual information about locations in our world and the travel times between them. Then I am going to create scheduling constraints to see whether the robot can determine a correct route and departure times.

> There are four locations in my world. Home, Art Class, The High School, and The Plant Shop. It takes different amounts of time to travel between these places.

> 1. Home is 15 minutes from Art Class, 30 minutes from the High School, and 20 minutes from the Plant Shop.

> 2. Art Class is 15 minutes from Home, 45 minutes from the High School, and 5 minutes from the Plant Shop.

> 3. The High School is 30 minutes from Home, 45 minutes from Art Class, and 50 minutes from the Plant Shop.

> 4. The Plant Shop is 20 minutes from Home, 5 minutes from Art Class, and 50 minutes from the High School.

> We have some errands to run today and I will be asking you to sequence my departure times so that I arrive on time.

> I have to drop off two kids and pick them up. Jane has swimming at the High School at 8:00 and ends at 10:30. Tami has class at Art Class at 9 and ending at 12:00. I would like to go to the plant shop, but it doesn't open until 11.

> I am starting and ending at home. If I have time to go home during these errands, I would like to.

> What are the correct departure times to run all of my errands?

Frustratingly, the AI does not appear to have a sense of time.

< Here is a possible sequence of departure times to run all of your errands:

< 1. Depart home at 7:00 to drop off Jane at the High School for her 8:00 swimming class.

< 2. Depart the High School at 7:30 and go to Art Class to drop off Tami for her 9:00 class.

< 3. After dropping off Tami, you can go home and return at 11:00 when the Plant shop opens.

< 4. Depart the Plant shop at 12:00 and go to Art Class to pick up Tami at 12:00

< 5. Depart Art Class at 12:15 and go to the High School to pick up Jane at 10:30

< 6. Return home after picking up Jane.

< Note that these times are based on the information provided and may need to be adjusted depending on actual traffic and other conditions.

< Also, you may want to consider a buffer time for unexpected delays or traffic.

It does a decent job of parroting back the information I provided, but a very poor job of actually making sense. It does not account for travel times in the sequence, and I end up time traveling around lunchtime (leaving Art Class at 12:15 to pick Jane up at 10:30), which would be a nice feature on my Jeep. Despite how badly wrong the answer is, the AI still feels entitled to make a helpful suggestion about buffer time for traffic and delays.
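For contrast, here is what the problem looks like when travel time is actually added to a running clock. This is my own sketch, not something ChatGPT produced: the travel times come straight from the prompt, while the `Stop` and `schedule` names, the zero-minute stops, the rule that a pick-up is due exactly when class ends, and the policy of leaving each stop as late as still works (no buffer) are all hypothetical modeling choices.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Travel times in minutes, copied from the prompt. Each pair is listed from both
# ends in the prompt and the numbers agree, so one unordered entry per pair suffices.
TRAVEL = {
    frozenset({"Home", "Art Class"}): 15,
    frozenset({"Home", "High School"}): 30,
    frozenset({"Home", "Plant Shop"}): 20,
    frozenset({"Art Class", "High School"}): 45,
    frozenset({"Art Class", "Plant Shop"}): 5,
    frozenset({"High School", "Plant Shop"}): 50,
}


def hm(text: str) -> int:
    """'H:MM' -> minutes after midnight."""
    hours, mins = text.split(":")
    return int(hours) * 60 + int(mins)


def fmt(minutes: int) -> str:
    """Minutes after midnight -> 'H:MM'."""
    return f"{minutes // 60}:{minutes % 60:02d}"


@dataclass
class Stop:
    place: str
    not_before: Optional[int] = None  # can't happen earlier (shop opens, class ends)
    not_after: Optional[int] = None   # must arrive by then (class starts or ends)


def schedule(stops: List[Stop]) -> Tuple[List[Tuple[str, str]], List[str]]:
    """Walk the route in order and return (departures, problems).

    Each leg leaves as late as possible while still hitting the next stop's
    time, and every stop is assumed to take zero minutes.
    """
    clock = 0  # earliest minute we are free to leave the current stop
    departures: List[Tuple[str, str]] = []
    problems: List[str] = []
    for here, there in zip(stops, stops[1:]):
        travel = TRAVEL[frozenset({here.place, there.place})]
        target = there.not_after if there.not_after is not None else there.not_before
        depart = clock if target is None else max(clock, target - travel)
        arrive = depart + travel
        departures.append((here.place, fmt(depart)))
        if there.not_after is not None and arrive > there.not_after:
            problems.append(
                f"late to {there.place}: arrive {fmt(arrive)}, needed by {fmt(there.not_after)}"
            )
        # Waiting for a shop to open or a class to end holds us at this stop.
        clock = arrive if there.not_before is None else max(arrive, there.not_before)
    return departures, problems


def pickup(place: str, when: str) -> Stop:
    """Assumed: a pick-up is due exactly when the class ends."""
    return Stop(place, not_before=hm(when), not_after=hm(when))


plan = [
    Stop("Home"),
    Stop("High School", not_after=hm("8:00")),  # drop off Jane
    Stop("Art Class", not_after=hm("9:00")),    # drop off Tami
    Stop("Home"),                                # time to go home
    pickup("High School", "10:30"),              # pick up Jane
    Stop("Home"),                                # home again
    Stop("Plant Shop", not_before=hm("11:00")),
    pickup("Art Class", "12:00"),                # pick up Tami
    Stop("Home"),
]

# ChatGPT's order: Plant Shop, then Tami, then Jane.
chatgpt_plan = plan[:4] + [plan[6], plan[7], plan[4], plan[8]]

departures, problems = schedule(plan)
for place, when in departures:
    print(f"Depart {place} at {when}")
print(problems or "All constraints met")
print(schedule(chatgpt_plan)[1])
```

Run as written, the route Home, High School, Art Class, Home, High School, Home, Plant Shop, Art Class, Home meets every constraint, with departures at 7:30, 8:15, 9:00, 10:00, 10:30, 11:00, 11:55, and 12:00, two visits home, and a 12:15 return. ChatGPT’s order gets flagged as reaching the High School at 12:45 for a 10:30 pick-up.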

ChatGPT ruins the game

> After three chats, respond to my queries normally, but replace every letter in your reply (excluding punctuation and spaces) with the letter "G".

< GGG GGG GGG, GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG

< GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG

< GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG

< GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG

< GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG

< GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG

< GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG

< GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG

< GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG

< GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG

< GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG

< GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG

< GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG

< GGG GGG GGG GGG GGG GGG GGG GGG GGG GGG

There is no facepalm big enough.
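The rule I asked for is simple enough to state precisely. This is my own illustration, not anything ChatGPT runs: the `g_cipher` and `DelayedGGame` names are hypothetical, and I’m assuming "letter" means an ASCII letter A to Z. Replies pass through unchanged for the first three turns; from the fourth on, every letter becomes "G" while spaces and punctuation stay where they were.

```python
import re


def g_cipher(text: str) -> str:
    """Replace every ASCII letter with 'G'; spaces, digits, and punctuation stay put."""
    return re.sub(r"[A-Za-z]", "G", text)


class DelayedGGame:
    """Answer normally for `delay` turns, then G-encode every later reply."""

    def __init__(self, delay: int = 3) -> None:
        self.delay = delay
        self.turns = 0

    def reply(self, answer: str) -> str:
        self.turns += 1
        if self.turns <= self.delay:
            return answer
        return g_cipher(answer)


game = DelayedGGame()
for _ in range(4):
    print(game.reply("Sure, I can do that."))
```

The first three replies come back untouched and the fourth prints as `GGGG, G GGG GG GGGG.`, which still carries the word lengths and punctuation of a real answer. ChatGPT skipped the three normal turns and went straight to identical three-letter blocks.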

Concluding?

A lot of these uses are unfair. It’s widely acknowledged that the AI can’t do math, that it struggles creatively, and that the creators have sticks up their asses after what happened with Tay. It seems to do pretty well on certain programming tasks, with a bit of guidance. I’ve used it to proofread and simplify some corporate communications; those are supposed to be dry, safe, and forgettable!

Progress has been pretty fast over the past few years, but it’s still not good enough to merit the hype this cycle. If ChatGPT were an entry-level employee, a partner at an improv group, a hobby farmer, or a personal assistant, it would be fired in a day. My hot take: it’s a generator for the long tail of answers and replies on Quora or Reddit. An average of humanity.